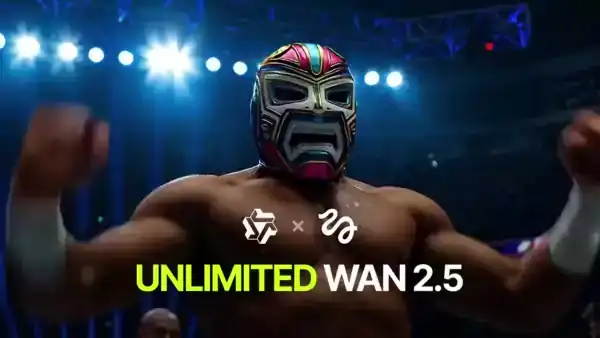

Welcome to the New Era of Controllable AI Video

Kling 2.6 Motion Control is a specialized multimodal model that understands the physics of the human body and the nuances of cinematic camera movement. This makes it possible to create consistent, high-fidelity marketing assets that previously required a full camera crew and weeks of post-production.

1. Kling 2.6: Motion Control Introduction

At its core, Motion Control allows you to take a Reference Image (your character) and a Motion Reference Video (the action) and fuse them together. The AI applies the movement, expression, and pacing of the video to your static character while maintaining their identity.

Unlike previous "image-to-video" iterations that often guessed the motion, Kling 2.6 allows for granular direction. Whether it is a subtle hand gesture or a high-octane martial arts kick, the model acts as a digital puppeteer.

The Motion Control feature represents a significant leap forward in generative video. Here are the standout capabilities of this new system:

1.1 Complex Motion Handling & Athletics

It can execute complicated sequences like dance routines or martial arts without losing character coherence. The model understands weight transfer and momentum, meaning if your reference video shows a heavy stomp or a high jump, the generated character will reflect that physical impact realistically.

1.2 Precision Hand & Finger Performance

Hands have notoriously been a weak point for AI video. This feature specifically improves finger articulation and hand movements by mimicking real footage.

1.3 Scene & Environment Flexibility

You aren't stuck with the reference video's background. You can use text prompts to change the environment or add scene elements (e.g., "A corgi runs in, circling around a girl's feet") while the character continues their referenced motion.

1.4 Advanced Camera & Perspective Modes

Kling 2.6 gives you granular control over how the camera interprets your reference. It offers distinct orientation modes that dictate how strictly the AI should follow the reference video's camera moves versus the original image's framing.

2. How to Use Motion Control: A Step-by-Step Guide

2.1 Preparing the Perfect Source Image

Your output is only as good as your starting point. When preparing an image in Higgsfield for Kling 2.6 Motion Control:

Limbs and Obstructions: Ensure the character's limbs are visible. If a character has their hands in their pockets in the image, but the motion reference requires them to wave, the AI will have to "hallucinate" the hands, which often leads to six-fingered glitches or blurry textures.

Negative Space: Leave "breathing room" around the subject. If the character is going to dance or move their arms wide, they need space within the frame to do so without clipping.
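If you want a quick sanity check on breathing room before uploading, the idea can be sketched in a few lines of Python. This is only an illustration: the `Box` type, the function names, and the 10% threshold are assumptions made for this example, not part of Kling or Higgsfield, and the subject's bounding box is something you would measure yourself in an image editor.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    """Subject bounding box in pixels (hypothetical helper type)."""
    left: int
    top: int
    right: int
    bottom: int


def margin_fractions(image_w: int, image_h: int, subject: Box) -> dict:
    """Empty space on each side, as a fraction of the image width or height."""
    return {
        "left": subject.left / image_w,
        "right": (image_w - subject.right) / image_w,
        "top": subject.top / image_h,
        "bottom": (image_h - subject.bottom) / image_h,
    }


def tight_sides(image_w: int, image_h: int, subject: Box,
                min_margin: float = 0.10) -> list:
    """Sides where the margin falls below min_margin (an assumed threshold)."""
    margins = margin_fractions(image_w, image_h, subject)
    return [side for side, frac in margins.items() if frac < min_margin]


# Example: a 1000x1000 image whose subject touches the bottom edge
# and sits close to the left edge.
print(tight_sides(1000, 1000, Box(left=50, top=200, right=800, bottom=1000)))
```

A side flagged here is not necessarily a problem (a full-body shot will often touch the bottom edge), but a tight left or right margin is worth a second look if the motion reference includes wide arm movements.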

2.2 Selecting a Motion Reference Video

The reference video (the "driving" video) acts as the skeleton for your generation.

Simplicity is King: Choose videos with a clear subject and a clean background. High-contrast videos where the actor's silhouette is distinct work best.

Framing Alignment: If you want a close-up of a face talking, use a close-up reference. If you use a full-body walking reference for a portrait-shot image, the AI will struggle to map the scale, resulting in a "shaking" or "warped" face.
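One rough way to think about framing alignment is to compare how much of the frame the face occupies in the source image versus the reference video. The sketch below assumes you have measured the face height and frame height in pixels yourself; the function names and the 1.5x tolerance are illustrative assumptions, not values published for Kling 2.6.

```python
def face_scale(face_height_px: float, frame_height_px: float) -> float:
    """Fraction of the frame height occupied by the face."""
    if face_height_px <= 0 or frame_height_px <= 0:
        raise ValueError("heights must be positive")
    return face_height_px / frame_height_px


def framing_mismatch(image_scale: float, video_scale: float) -> float:
    """Ratio (>= 1) between the larger and the smaller face scale."""
    return max(image_scale, video_scale) / min(image_scale, video_scale)


def framing_aligned(image_scale: float, video_scale: float,
                    max_ratio: float = 1.5) -> bool:
    """True when the two framings are within max_ratio (assumed tolerance)."""
    return framing_mismatch(image_scale, video_scale) <= max_ratio


# Portrait image (face fills half the frame) vs. full-body walking reference
# (face is about a tenth of the frame): a large mismatch.
portrait = face_scale(540, 1080)
full_body = face_scale(108, 1080)
print(framing_aligned(portrait, full_body))
```

When the check fails, the simpler fix is usually to pick a reference video shot at a framing closer to the source image.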

2.3 Simply Generate

Simply hit Generate to transform your assets into broadcast-quality animations. The result is a downloadable masterpiece optimized for TikTok, Instagram, or YouTube.

Practical Use Cases with Higgsfield

Virtual Influencers: Create a consistent brand mascot. Use your own team members as motion references to give the mascot a "human" and "relatable" personality without needing a studio.

Product Demos: Use Motion Control to show a hand interacting with a digital interface or a physical product.

Localizing Content: Take a single "Hero Video" and use different character images (diverse ethnicities, different age groups) while keeping the exact same motion. This allows for global campaign localization at zero extra filming cost.

Maximize Motion Control with Kling 2.6

Stop guessing and start directing with the most advanced Motion Control engine in AI video. Upload your reference video to Higgsfield and watch your characters mirror every move with flawless accuracy.